Overview

In many real-world applications where specifying a proper reward function is difficult, it is desirable to learn policies from expert demonstrations. Adversarial Inverse Reinforcement Learning (AIRL) is one of the most common approaches for learning from demonstrations. However, because the policy is stochastic, the computation graph of AIRL is not end-to-end differentiable in the way that Generative Adversarial Networks (GANs) are, so AIRL has to rely on high-variance gradient estimation methods and large sample sizes.
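To make the variance argument concrete, here is a toy sketch. It is not AIRL itself. It compares two unbiased single-sample estimators of the gradient of expected reward with respect to the mean of a one-dimensional Gaussian policy. The score-function (REINFORCE-style) estimator only needs reward values, which is the situation when the graph cannot be differentiated end to end. The pathwise (reparameterization) estimator backpropagates through a differentiable path. The quadratic reward, the `target` value, and the function names are hypothetical choices made for illustration. For `mu=0`, `sigma=1`, `target=1`, the true gradient is 2, and the per-sample variances work out analytically to 30 (score-function) and 4 (pathwise).

```python
import random
import statistics


def reward(a: float, target: float = 1.0) -> float:
    """Hypothetical toy reward r(a) = -(a - target)^2, differentiable in a."""
    return -(a - target) ** 2


def reward_grad(a: float, target: float = 1.0) -> float:
    """Analytic derivative dr/da of the toy reward."""
    return -2.0 * (a - target)


def gradient_samples(mu: float, sigma: float, n: int, seed: int = 0):
    """Draw n single-sample estimates of d/dmu E[r(a)] with a ~ N(mu, sigma^2).

    Returns (score_function_samples, pathwise_samples).
    """
    rng = random.Random(seed)
    score, pathwise = [], []
    for _ in range(n):
        eps = rng.gauss(0.0, 1.0)
        a = mu + sigma * eps  # reparameterized Gaussian sample
        # Score-function estimator: r(a) * d/dmu log N(a; mu, sigma^2).
        # It uses only r(a), so r can be treated as a black box.
        score.append(reward(a) * (a - mu) / sigma ** 2)
        # Pathwise estimator: chain rule through a = mu + sigma * eps,
        # where da/dmu = 1; this requires r to be differentiable in a.
        pathwise.append(reward_grad(a))
    return score, pathwise


if __name__ == "__main__":
    s, p = gradient_samples(mu=0.0, sigma=1.0, n=100_000)
    # Both means approach the true gradient -2 * (mu - target) = 2.
    print(f"score-function: mean={statistics.fmean(s):.3f} var={statistics.pvariance(s):.2f}")
    print(f"pathwise:       mean={statistics.fmean(p):.3f} var={statistics.pvariance(p):.2f}")
```

Both estimators converge to the same mean. The score-function estimator has a much larger spread, so it needs far more samples to reach the same accuracy. This is the sample-size cost described above.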

In this work, we propose the Model-based Adversarial Inverse Reinforcement Learning (MAIRL), an end-to-end

model-based

policy optimization method with self-attention. Considering the problem of learning robust reward and policy from

expert

demonstrations under learned environment dynamics. MAIRL has the advantage of the low variance for policy

updating, thus

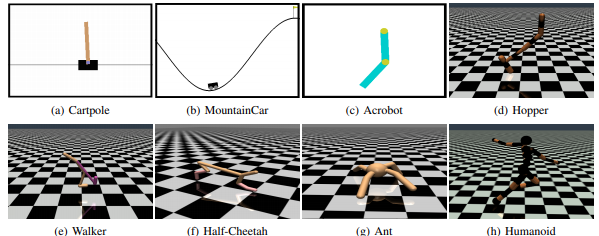

addressing the key issue of AIRL. We evaluate our approach thoroughly on various control tasks as well as the

challenging transfer learning problems where training and test environments are made to be different.
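The idea of end-to-end policy optimization through a learned dynamics model can be illustrated with a deliberately tiny sketch. It is not MAIRL. MAIRL learns its dynamics model, which uses self-attention, and it also learns its reward. In this sketch, a fixed linear model `s' = a_coef * s + b_coef * a`, a quadratic reward `-s'^2`, and a linear policy `a = k * s` stand in for those learned components. All coefficients and names are hypothetical. Because every step is differentiable, the derivative of the rollout return with respect to the policy parameter `k` can be carried forward alongside the state, with no sampling-based gradient estimator. Forward-mode differentiation is written by hand here in place of an autodiff library.

```python
def rollout_return_and_grad(k, s0=1.0, a_coef=0.9, b_coef=0.5, horizon=10):
    """Roll out the linear policy a = k * s through a differentiable toy model.

    Toy model (stand-in for a learned dynamics model): s' = a_coef * s + b_coef * a.
    Toy reward (stand-in for a learned reward): r(s') = -s'^2.
    Returns (total_reward, d total_reward / d k), with the derivative
    propagated forward together with the state.
    """
    s, ds_dk = s0, 0.0
    total, dtotal_dk = 0.0, 0.0
    for _ in range(horizon):
        a = k * s
        da_dk = s + k * ds_dk                  # product rule on a = k * s
        s_next = a_coef * s + b_coef * a       # differentiable model step
        ds_next_dk = a_coef * ds_dk + b_coef * da_dk
        s, ds_dk = s_next, ds_next_dk
        total += -s * s
        dtotal_dk += -2.0 * s * ds_dk          # chain rule through the reward
    return total, dtotal_dk


if __name__ == "__main__":
    k = 0.0
    for step in range(5):
        ret, grad = rollout_return_and_grad(k)
        print(f"step {step}: k={k:.3f} return={ret:.3f}")
        k += 0.01 * grad  # gradient ascent on the model-predicted return
```

The gradient here is exact for the toy model. With a learned model, its quality depends on how accurately the model matches the real environment.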

The results show that our approach not only learns near-optimal rewards and policies that match expert behavior but also outperforms previous inverse reinforcement learning algorithms in real robot experiments.

BibTeX

@ARTICLE{sun2021adversarial,
  author={J. {Sun} and L. {Yu} and P. {Dong} and B. {L} and B. {Zhou}},
  journal={IEEE Robotics and Automation Letters},
  title={Adversarial Inverse Reinforcement Learning with Self-attention Dynamics Model},
  year={2021},
}

Related Work
