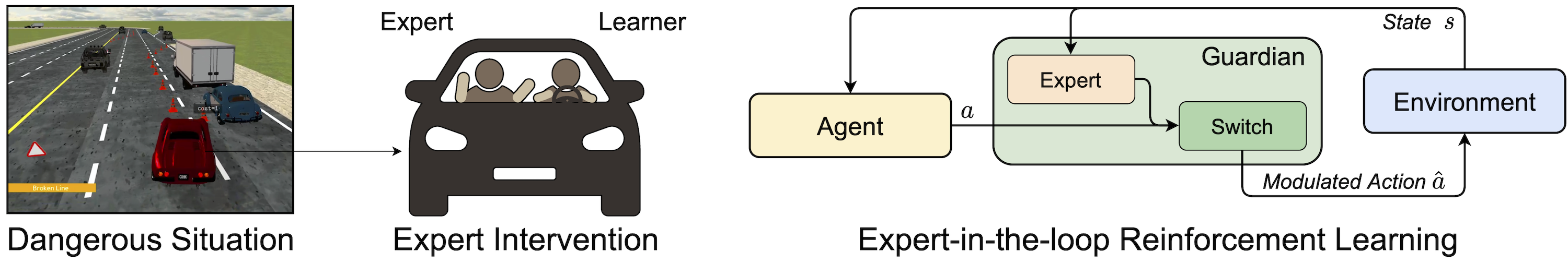

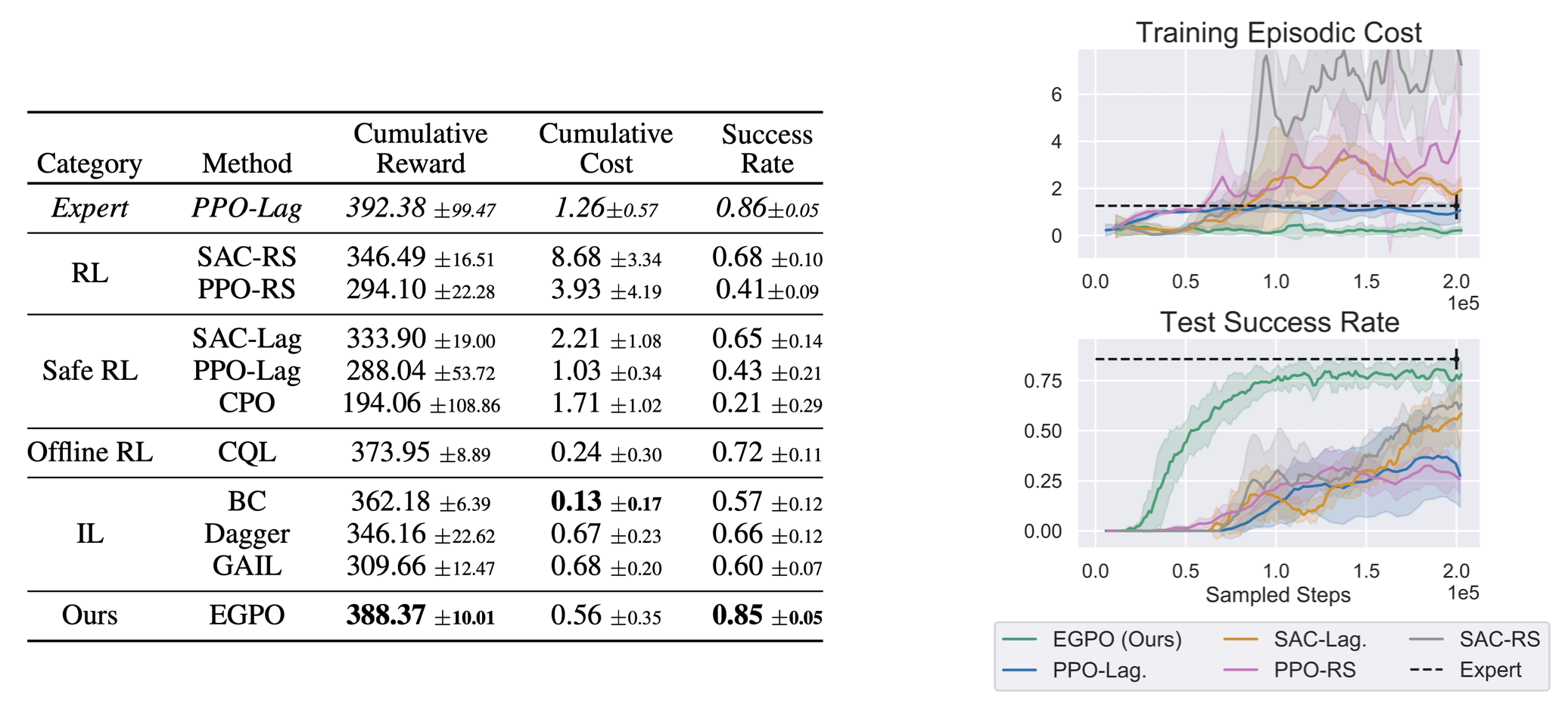

We develop a novel Expert Guided Policy Optimization (EGPO) method, integrating the guardian in the loop of RL, which contains an expert policy and a switch function to decide when to intervene. We utilize constrained optimization and offline RL techniques to tackle trivial solutions and improve the learning on expert's demonstrations. Safe driving experiments show that our method achieves superior training and test-time safety, sample efficiency, and generalizability.

1The Chinese University of Hong Kong,

2SenseTime Research

3Centre for Perceptual and Interactive Intelligence

3Centre for Perceptual and Interactive Intelligence

| Webpage | Code | Poster | Paper |